Reservoir modeling and simulation in today's high-performance computing environments

Modeling the earth's subsurface and predicting reservoir flow have always been limited by the supporting hardware and software technology. Over the last decade, significant progress has been made in addressable memory and processor speed; however, the size of models geologists and reservoir engineers require continues to push the capabilities of the available hardware/software infrastructure.

The detail geologists require to accurately represent subsurface heterogeneity often results in models that are physically very large, larger than could reasonably be used in a 20-30 year simulation of reservoir performance.

Because most major field development decisions are based on reservoir simulation derived from these subsurface models, the quality of these models is critical to the success of any E&P operation.

A combination of new software techniques and advances in high-performance computing is providing geologists and engineers with several new options that will allow for more accurate subsurface models that can lead to better predictions of reservoir performance.

Reservoir models built by geologists tend to be built on a finer scale than that provided by seismic data but not quite to the detailed scale that is provided by well logs.

The reservoir model used by the engineer is normally coarser than the geologists' model to enable reasonably fast simulation runs over the producing life of the field but is focused on accurate representation of the elements of the geologic model that affect fluid flow.

The fact that most geologic models are built at a scale too fine for reservoir simulation has led to a practice called "upscaling," in which the model is coarsened to reduce the overall number of "cells" that make up the model.

The assumption has been that the smaller the model, the faster the reservoir simulator will be able to generate a 20-30 year prediction of well and field production. The coarsening of the model can lead to reservoir simulation runs that can be completed in a matter of hours, as opposed to days.

The challenge in applying this process has been to retain the physical characteristics of the geologic model, in particular the properties critical to fluid flow, when the model is "upscaled." This process has been used with varying degrees of success for 20 years but has often disconnected the reservoir models and work processes of the geoscientist and the engineer.

The geoscientist's reservoir model is often "handed off" to the engineer, who in some cases upscales it in isolation. Any errors introduced during upscaling can then be compounded in the history matching process, when the engineer may adjust features in the reservoir model that help match predicted and actual flow performance but violate the geologic interpretations and data.


Four basic approaches that can be successfully used in reservoir simulation are available for subsurface modeling:

1. Traditional. A geologic model "upscaled" to a second model and used for reservoir simulation. The result is two models, only one of which is used for simulation.

2. Geologic. A geologic model run without coarsening in the reservoir simulator. The result is one model but with potentially very slow simulation runs.

3. Hybrid. One model generated, but with varying scale, in which the model has more detail where needed and less where it is not. The result is one model with potentially more acceptable simulation runs.

4. Multiscale. Two or more models, one fine and one coarse, linked and used simultaneously in the reservoir simulator. This is an area of industry research; each of the models would be used in the simulation where appropriate.

Traditional approach

The concept of upscaling has been in use for many years and is considered by most to be a required step in reservoir simulation.

The pitfalls in the process have also been well documented and range from the distortion of geologic features like fault locations to the inappropriate lumping of layers resulting in the "averaging away" of thinner permeable zones.
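To see how a thin permeable zone can be "averaged away," the sketch below lumps three layers into a single cell using the simple averaging rules for layered media: a thickness-weighted arithmetic mean for flow along the layers and a thickness-weighted harmonic mean for flow across them. These rules and the layer values are illustrative assumptions, not the article's workflow; many upscaling tools also offer more elaborate flow-based methods.

```python
def upscale_layers(thickness_m, perm_md):
    """Lump a stack of layers into one cell (simple averaging, assumed).

    Horizontal flow (along the layering): thickness-weighted arithmetic mean.
    Vertical flow (across the layering): thickness-weighted harmonic mean.
    """
    total_h = sum(thickness_m)
    k_h = sum(h * k for h, k in zip(thickness_m, perm_md)) / total_h
    k_v = total_h / sum(h / k for h, k in zip(thickness_m, perm_md))
    return k_h, k_v


# Hypothetical column: a 1 m, 1,000 mD streak between two 9.5 m, 10 mD layers.
thickness = [9.5, 1.0, 9.5]
perm = [10.0, 1000.0, 10.0]
k_h, k_v = upscale_layers(thickness, perm)
print(f"k_h = {k_h:.1f} mD, k_v = {k_v:.1f} mD")
```

In this example the 1,000 mD streak survives only as a 59.5 mD horizontal average, and the vertical value (about 10.5 mD) is controlled almost entirely by the tight layers, so the fast-flow conduit in the geologic model no longer exists in the coarse cell.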

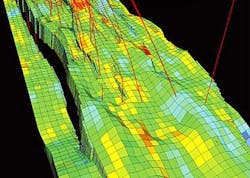

The goal of performant reservoir simulation has to be balanced with the risk of using a model that is not representative of the geologic model. The other challenge in traditional upscaling is that it generates two or more models, each of which can in many cases be edited or updated in isolation. A typical geologic scale model is shown in Fig. 1.

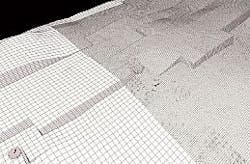

A new modeling method that has recently become available is called "clever coarsening." This new method tracks all of the fault locations and the parts of the model affected by them. It also keeps the original grid density in the area of these faults but applies the coarsening away from them.

The net result is that the original fault locations are preserved in the upscaled model while coarsening the model and reducing the overall cell count (Fig. 2). In this particular example, the total number of cells in the model was reduced by a factor of 3, and the time to run 20 years of production was reduced by a factor of 3.
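The principle of keeping the original resolution near faults while coarsening elsewhere can be sketched along a single grid axis. This is a hypothetical illustration, not the actual "clever coarsening" implementation: the function name `coarsen_axis`, the one-column `buffer` around each fault and the merge `factor` are all assumptions.

```python
def coarsen_axis(n_cells, fault_cols, buffer=1, factor=3):
    """Group the columns of one grid axis into coarse cells (hypothetical).

    Columns within `buffer` of a fault column keep their original resolution
    (one column per group); other columns are merged in runs of up to `factor`.
    """
    keep_fine = {
        c
        for f in fault_cols
        for c in range(f - buffer, f + buffer + 1)
        if 0 <= c < n_cells
    }
    groups, run = [], []
    for c in range(n_cells):
        if c in keep_fine:
            if run:  # close any partial coarse run before the fault zone
                groups.append(run)
                run = []
            groups.append([c])
        else:
            run.append(c)
            if len(run) == factor:
                groups.append(run)
                run = []
    if run:
        groups.append(run)
    return groups


groups = coarsen_axis(30, fault_cols=[10])
print(len(groups))  # 12 groups for 30 original columns
```

The fault column and its neighbours stay as single columns, so the fault position is unchanged, while the rest of the axis is reduced threefold.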

Geologic scale only

In recent years, the increase in CPU performance predicted by Moore's Law and, in particular, the advent of parallel computing have enabled reservoir simulators to use larger and larger models effectively.

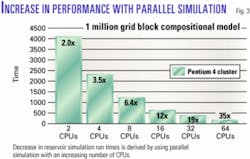

Fig. 3 shows the improvement of parallel reservoir simulator performance with additional CPUs, running a 1 million-cell model (compositional).
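Performance gains such as those in Fig. 3 are commonly summarized as speedup, S(p) = T(1)/T(p), and parallel efficiency, E(p) = S(p)/p, where T(p) is the wall-clock time on p CPUs. The sketch below computes both; the timings are hypothetical placeholders, not the Fig. 3 measurements.

```python
def parallel_metrics(times_by_cpus):
    """Return {p: (speedup, efficiency)} from wall-clock times keyed by CPU count."""
    t1 = times_by_cpus[1]  # serial (1-CPU) reference time
    return {p: (t1 / t, t1 / (t * p)) for p, t in sorted(times_by_cpus.items())}


# Hypothetical wall-clock hours, NOT the Fig. 3 data.
times = {1: 48.0, 2: 25.0, 4: 13.0, 8: 7.5}
metrics = parallel_metrics(times)
for p, (s, e) in metrics.items():
    print(f"{p:>2} CPUs: speedup {s:4.1f}x, efficiency {e:6.1%}")
```

Efficiency below 100% reflects the communication and load-balancing overhead that grows as a model is split across more CPUs.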

A few years ago, running a model of this size would have been possible on only the most powerful computers available and at a prohibitively high cost. With the decrease in cost of CPUs and increase in the performance, parallel computing has made this kind of modeling available to most E&P companies in today's world.

Geologic models, however, have also increased in size as more compute power becomes available. While there is now a class of reservoirs that can be effectively modeled at a scale that is also realistic for reservoir simulation (generally fewer than 6 million cells today), other reservoirs have geologic heterogeneity that requires considerably larger models.

For some of these reservoirs, a technique called "streamline simulation" can model the flow in a reservoir more accurately than traditional reservoir simulators can. The "price," however, is paid in reduced physics in the fluid modeling.

In addition, to understand the overall uncertainty for a given reservoir, it can sometimes be desirable to run several smaller models of the reservoir instead of one fine-scale model. As a result, some form of upscaling or coarsening will continue to be needed.


Hybrid models

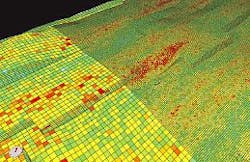

One approach that might provide a good compromise between the modeling needs of the geologist and the reservoir engineer is called hybrid gridding.

It involves the use of local grid refinement (LGR), a technique that refines the grid in specific areas where more detail is needed. With this method, the geologist and the engineer would work together to design one model that has finer grids in areas where more detail is needed and a coarser grid where it is not.

For example, a finer grid might be needed in the area around existing wells where the seismic shows potential heterogeneity in the geology, or near fluid contacts. On the other hand, less detail would be needed in the aquifer, away from wells, or in areas where the reservoir is expected to be fairly homogeneous. This process would be carried out before property modeling so that there would be no distortion due to re-sampling. An example of a hybrid grid is shown in Fig. 4. Where appropriate, this technique can produce one model that supports both geologic modeling and reservoir simulation.
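A rough way to see why a hybrid grid helps is to count cells. The sketch below assumes a 2D coarse grid in which every coarse cell whose centre lies within a chosen radius of a well is split into refine x refine local cells; the function name, grid size, well locations, radius and refinement factor are hypothetical, and real LGR would also refine vertically and around fluid contacts.

```python
import math


def hybrid_cell_count(nx, ny, wells, radius, refine):
    """Count cells in a 2D grid with local grid refinement (LGR) around wells.

    Coarse cell (i, j) is treated as centred at (i, j) in coarse-cell units.
    Cells within `radius` of any well become refine x refine local cells;
    all other cells stay coarse.
    """
    total = 0
    for i in range(nx):
        for j in range(ny):
            near_well = any(math.hypot(i - wi, j - wj) <= radius for wi, wj in wells)
            total += refine * refine if near_well else 1
    return total


wells = [(5, 5), (14, 12)]  # hypothetical well locations (coarse-cell indices)
hybrid = hybrid_cell_count(20, 20, wells, radius=2, refine=3)
uniform_fine = 20 * 20 * 3 * 3
print(hybrid, uniform_fine)  # 608 3600
```

Here 26 coarse cells near the two wells are refined, giving 608 cells in total, versus 3,600 if the whole area were gridded at the fine resolution.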


Multiscale models

The most rigorous modeling approach will require more than one model at different scales, linked together with "meta-data" and potentially initialized and used as a set by the reservoir simulator.

Meta-data would be used to track which model is the parent and the relationships between the models, thus enabling a single update of the set as new data and interpretations are added.
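The meta-data idea can be sketched as a small parent/child registry in which an update applied to the parent model is propagated to every model derived from it. The `GridModel` class, its fields and the cell counts are hypothetical illustrations, not an existing product API.

```python
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class GridModel:
    """Hypothetical record for one model in a linked multiscale set."""
    name: str
    cell_count: int
    # Excluded from repr/eq so the parent <-> child cycle is never traversed.
    parent: Optional["GridModel"] = field(default=None, repr=False, compare=False)
    children: List["GridModel"] = field(default_factory=list)
    version: int = 0

    def add_child(self, child: "GridModel") -> "GridModel":
        # Record the parent/child link in both directions.
        child.parent = self
        self.children.append(child)
        return child

    def update(self, note: str) -> List[str]:
        # Bump this model's version, then push the same update to every
        # model derived from it, so the whole set stays consistent.
        self.version += 1
        log = [f"{self.name} v{self.version}: {note}"]
        for child in self.children:
            log.extend(child.update(note))
        return log


# Illustrative cell counts only.
fine = GridModel("geologic", cell_count=10_000_000)
coarse = fine.add_child(GridModel("simulation", cell_count=1_000_000))
log = fine.update("revised fault interpretation")
print("\n".join(log))
```

A single call on the parent updates both models, which is the behavior the meta-data is meant to guarantee.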

Within the simulator, the higher resolution model would be used in conjunction with the coarse model, but only where the extra detail adds value. This technique is an area of ongoing research and could prove valuable for many situations.

Summary

The prediction of reservoir performance throughout the life of an E&P asset remains the key challenge for production geologists and reservoir engineers. This prediction needs to be evergreen, reflecting the continuously evolving state of knowledge about the property.

While the ultimate demands of this process will probably continue to outpace the capabilities of the computational and visual infrastructure that even high-performance computing can provide, the new tools and methodologies described in this article will lead to models that more accurately reflect the static and dynamic properties of a reservoir.

The author

Scot Evans is field development planning director in Landmark's Marketing and Systems Group. He joined Landmark in 1993 and has previously directed Landmark programs in reservoir management and integrated drilling and real-time systems. He is a graduate of Bucknell University and holds an MS in geology from the University of Texas at Arlington.