Seismic progress: where the trends might lead

Projecting trends forward to predict future conditions is always dangerous. Trends never last, and projections can't account in advance for the changes that end them. For anyone who has followed the progress of the past two decades, however, the temptation to wonder where current trends in seismic technology will lead is irresistible.

During the last 20 years of the 20th Century, those trends reshaped petroleum exploration and development. They ushered geophysics and geophysicists from the outer periphery of upstream work to the very center of the action. They pushed seismic data into roles far beyond the structural identification uses to which they earlier were confined. And they unlocked inquiry on myriad fronts into what interpreters might deduce about the subsurface from sound returns recorded on the surface or in water.

Most nongeophysicists can easily appreciate the business results of these trends: cost reduction through improved drilling success rates, for example, and identification of drilling targets previously unseen on seismic records. Most nongeophysicists in the industry further recognize that the improvements came largely from the transition from two-dimensional (2D) to three-dimensional (3D) methods for acquiring, processing, and interpreting seismic data and from the burgeoning computing power that made 3D techniques practical. Many nongeophysicists probably also appreciate the role that the interactive workstation played in this revolution.

It is, however, much harder for nongeophysicists to understand the science and techniques that propel the seismic advance and that riddle it with such baffling phrases as "amplitude versus offset," "prestack depth migration," and "attribute analysis." Yet it is along esoteric technical paths such as these, and many others, that seismic progress now travels. Cataloging and explaining them all is far beyond the scope of this essay (and the ability of its author). The aim here is to construct a conceptual framework by which nongeophysicists might assess past achievements of the seismic industry and its innovators and thus anticipate how those achievements might shape upstream work in the 21st Century.

What seismic does

Oil and gas companies perform seismic operations to reconcile a human dependency with contrary geophysical reality. Sighted people rely heavily on vision to make sense out of physical complexity. But sight doesn't work where oil and gas reside: in the physically complex subsurface. It doesn't work because light can't go there.

Sound can. An impulse on the surface sends a wave of vibration into the subsurface. Variations in the density of rocks and the speed and direction of sound travel within them influence the wave's movement. Among these influences are reflection and refraction, which cause some of the vibrating energy to return to locations on or near the surface where it can be recorded. Those recordings, of the strengths and times of sonic returns by location, constitute seismic data.

Everything else in seismic work relates to making sense out of the recordings. From the beginning, the work has been visual. A pattern of trace excursions across an old analog shot record indicated a reflecting horizon somewhere at depth. Processing made the pattern look roughly (very roughly) as the reflecting surface might appear in a cut-away, cross-sectional view.

The construction of visual models from the sonic responses of geology has been so central to seismic work at all stages of history that it is easy now to take for granted. Yet accommodation of sonic measurement to human vision has defined many of the leaps that seismic work has taken during the past 20 years and represents one of the most important developments now in progress.

Improved resolution

Indeed, many past advances in seismic know-how amount to improvements in resolution of seismic data and in the precision of seismic images. The passage from 2D to 3D work was important here.

Seismic resolution, the ability to distinguish two nearby objects from one another, depends on sampling density and on the dominant wavelength of sound at the reflecting surface. In the matter of wavelength, the contrast with light is instructive. Sound's wavelength in the subsurface, equal to velocity divided by frequency, is often on the order of several hundred feet and can be greater; the wavelength of visible light is measured in tens of millionths of an inch.
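The wavelength comparison comes down to one division: wavelength equals velocity divided by frequency. The sketch below uses assumed velocities and dominant frequencies, not values from this article. It also applies the common quarter-wavelength rule of thumb for the thinnest bed whose top and base reflections can be told apart, a convention the article itself does not state.

```python
# Assumed velocities (ft/s) and dominant frequencies (Hz), for illustration only.

def dominant_wavelength(velocity, frequency_hz):
    """Wavelength = velocity / frequency, in the length unit of the velocity."""
    return velocity / frequency_hz


def tuning_thickness(velocity, frequency_hz):
    """Quarter-wavelength rule of thumb for the thinnest separable bed."""
    return dominant_wavelength(velocity, frequency_hz) / 4.0


for v_ft_s, f_hz in [(6000.0, 40.0), (10000.0, 25.0), (15000.0, 20.0)]:
    print(f"{v_ft_s:,.0f} ft/s at {f_hz:.0f} Hz: "
          f"wavelength {dominant_wavelength(v_ft_s, f_hz):,.0f} ft, "
          f"tuning thickness ~{tuning_thickness(v_ft_s, f_hz):,.0f} ft")

# Green light (about 550 nanometers) expressed in inches, for comparison.
light_in = 550e-9 / 0.0254
print(f"550-nm light: {light_in:.1e} in")
```

At 10,000 ft/s and 25 Hz, for example, the wavelength is 400 ft. The quarter-wavelength rule then puts the thinnest separable bed near 100 ft.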

The sonic energy central to seismic methods thus cannot resolve detail to anywhere near the extent that light does. The limitation, however, doesn't preclude improvements in resolution. For many years, seismic processors have used mathematical techniques to recompress the sonic impulse, which stretches out over time as it travels through the subsurface and interacts with recording equipment. Among other things, the recompression, called deconvolution, improves resolution in time. An active area of both practice and research, called wavelet processing, develops even more-sophisticated methods for giving the impulse an optimum temporal shape.

One way the advent of 3D techniques improved seismic resolution was by greatly increasing sampling density. A 3D survey records reflections from a single shot over an area crisscrossed by recording cables rather than along a single line, as in 2D.
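Rough arithmetic shows the scale of the change. The sketch below counts subsurface sample points per square kilometer for two cases: an assumed grid of parallel 2D lines, and an assumed 3D bin grid. All spacings are hypothetical, and real survey designs vary widely. In the 2D case, every sample also lies on a line, and nothing is recorded between lines.

```python
# Hypothetical survey geometries, chosen only to illustrate the arithmetic.

def samples_per_km2_2d(line_spacing_m, cmp_spacing_m):
    """Subsurface sample points per square kilometer for parallel 2D lines."""
    points_per_km_of_line = 1000.0 / cmp_spacing_m
    lines_per_km = 1000.0 / line_spacing_m
    return points_per_km_of_line * lines_per_km


def samples_per_km2_3d(inline_bin_m, crossline_bin_m):
    """Subsurface sample points (bins) per square kilometer for a 3D grid."""
    return (1000.0 / inline_bin_m) * (1000.0 / crossline_bin_m)


two_d = samples_per_km2_2d(line_spacing_m=1000.0, cmp_spacing_m=12.5)
three_d = samples_per_km2_3d(inline_bin_m=25.0, crossline_bin_m=25.0)
print(f"2D: {two_d:.0f} points/km^2, 3D: {three_d:.0f} bins/km^2, "
      f"ratio {three_d / two_d:.0f}x")
```

With these assumed spacings, the 3D grid samples the subsurface about 20 times more densely.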

The move to 3D furthermore made a crucial processing step called migration vastly more accurate than it can be in 2D. Migration moves reflections from the positions at which they appear in the recorded data to the subsurface locations that produced them, correcting for the effects of dip and of variations in sound velocity. By allowing for correction in more than a single direction across a 3D volume of data rather than just along the line defined by receiver locations, as in 2D, migration in 3D greatly improves precision.

It also focuses resulting images by accounting for the spreading effects of a spherical wave field in ways that 2D migration cannot. The result is much more accurate location of subsurface reflecting points than is possible in 2D.
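Migration algorithms are many. The sketch below shows one of the oldest, diffraction-summation (Kirchhoff-style) migration, in its simplest form. It assumes a 2D zero-offset section and a constant velocity, and it omits the amplitude and obliquity weights that a real implementation applies. The grid sizes and velocity are assumptions. A single point scatterer produces a hyperbola on the synthetic section. Summing along trial hyperbolas collapses that energy back to the scatterer's location, a small-scale version of the focusing described above.

```python
import math


def diffraction_time_index(ix_trace, ix_point, it0, dx, dt, velocity):
    """Sample index of the zero-offset diffraction from a scatterer at (ix_point, it0)."""
    t0 = it0 * dt
    offset = (ix_trace - ix_point) * dx
    t = math.sqrt(t0 ** 2 + (2.0 * offset / velocity) ** 2)
    return int(round(t / dt))


def synthetic_diffraction(nx, nt, dx, dt, velocity, ix_point, it0):
    """Zero-offset section (list of traces) recorded over one point scatterer."""
    data = [[0.0] * nt for _ in range(nx)]
    for ix in range(nx):
        it = diffraction_time_index(ix, ix_point, it0, dx, dt, velocity)
        if it < nt:
            data[ix][it] = 1.0
    return data


def diffraction_sum_migrate(data, dx, dt, velocity):
    """Constant-velocity diffraction-summation migration (no amplitude weights)."""
    nx, nt = len(data), len(data[0])
    image = [[0.0] * nt for _ in range(nx)]
    for ix_out in range(nx):
        for it0 in range(nt):
            total = 0.0
            # Sum input samples along the hyperbola a scatterer at (ix_out, it0) would produce.
            for ix_in in range(nx):
                it = diffraction_time_index(ix_in, ix_out, it0, dx, dt, velocity)
                if it < nt:
                    total += data[ix_in][it]
            image[ix_out][it0] = total
    return image


# Usage with assumed parameters: 31 traces 20 m apart, 4-ms sampling, 2,000 m/s.
section = synthetic_diffraction(31, 150, 20.0, 0.004, 2000.0, ix_point=15, it0=75)
image = diffraction_sum_migrate(section, 20.0, 0.004, 2000.0)
peak_value, peak_x, peak_t = max(
    (image[ix][it], ix, it) for ix in range(31) for it in range(150))
print(f"image peak {peak_value:.0f} at trace {peak_x}, sample {peak_t}")
```

On the unmigrated section, the scatterer's energy is spread along a hyperbola across all 31 traces. After summation it concentrates at the scatterer's true position.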

The workstation

The volumetric data set produced by 3D work led to another advance sometimes taken for granted now but not available to the earliest 3D interpreters.

The benefits of improved accuracy and better sampling, of course, were immediate when contractors shot the first commercial 3D surveys in the middle and late 1970s. But visual records were confined to two dimensions in those days. It was a step forward when 3D data quickly enabled interpreters to depict signal strengths (amplitudes) on horizontal sections, complementing more-traditional vertical plots of reflections as a function of time. But the closest approximation then available to a 3D image of the subsurface was still just a sandwich of 2D cross sections linked by horizontal slices.

The view improved in the early 1980s with the emergence of interactive workstations and growth in computing power. Workstations enabled seismic interpreters to take ever-increasing visual advantage of the data volumes generated by 3D surveys. Now geophysicists became able to work with data in nearly real time and to see results in computer-screen images that could be made to appear to have three dimensions. Much more so than was possible with the paper records common earlier, seismic images began to have 3D shapes corresponding to the subsurface features that yielded them. Much of the progress in seismic methods that has occurred since the 1980s amounts to impressive refinements along many fronts of this union of 3D seismic data volumes and interactive workstations, enabled and leveraged by zooming growth in computing power. The result is an increasingly detailed, ever-more accurate, simulated 3D image of subsurface structures that exist, in fact, in three dimensions.

Into the reservoir

Seismic improvements have not been confined to imaging structure, however. Sampling and computational advances have also enabled geophysicists in some cases to deduce reservoir characteristics and rock types from seismic data, and even to detect the presence of hydrocarbons.

Direct hydrocarbon indicators (DHI's) are nothing new. Their use dates back at least to the early 1970s, when interpreters began to relate characteristic amplitude patterns on seismic cross sections to the presence of gas-bearing sands. The patterns emerge because the sonic response of a sand with gas in the pore space contrasts greatly with that of the same sand containing oil or water and, in the classic case, with that of an overlying shale. They thus form bright spots (where the response is an amplitude gain), dim spots (where the response is the reverse), and flat spots (at fluid boundaries within sands, which must be thick enough to allow for separation of the reflections).
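The amplitude contrasts behind these patterns follow from the normal-incidence reflection coefficient, R = (Z2 - Z1) / (Z2 + Z1). Here Z is acoustic impedance (velocity times density), and subscripts 1 and 2 denote the layers above and below an interface. The rock properties in the sketch are assumed values chosen to produce a bright spot. With a brine sand whose impedance is well above the shale's, the same arithmetic would instead yield a dim spot.

```python
# Hypothetical rock properties: velocity in m/s, density in g/cm^3 (units cancel in R).

def impedance(velocity, density):
    return velocity * density


def reflection_coefficient(z_upper, z_lower):
    """Normal-incidence reflection coefficient at an interface."""
    return (z_lower - z_upper) / (z_lower + z_upper)


shale = impedance(2438.0, 2.25)
brine_sand = impedance(2500.0, 2.20)
gas_sand = impedance(2000.0, 2.00)

r_brine = reflection_coefficient(shale, brine_sand)       # weak: ordinary sand response
r_gas = reflection_coefficient(shale, gas_sand)           # strong negative: a bright spot
r_contact = reflection_coefficient(gas_sand, brine_sand)  # gas over water in one sand: a flat spot
for label, r in [("shale/brine sand", r_brine), ("shale/gas sand", r_gas),
                 ("gas/water contact", r_contact)]:
    print(f"{label:>18}: R = {r:+.3f}")
```

With these values, the brine-sand top barely reflects. The gas-sand top reflects strongly with reversed polarity, and the flat gas-water contact produces its own strong, positive event.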

Because of the obvious benefits to exploration and drilling efficiency, DHI's have received much attention in seismic research and development, and the techniques involved have grown in sophistication since their early days.

DHI verification, for example, is an important application of an interpretation method now receiving much attention: amplitude variation with (or versus) offset, or AVO. With AVO, interpreters in some cases can predict reservoir characteristics by studying how reflection amplitudes change as a function of distance between the seismic source and recording location (offset).
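AVO behavior is often summarized with a two-term approximation, usually credited to Shuey: R(theta) ~ A + B sin^2(theta). Here theta is the angle of incidence, A is the intercept, and B is the gradient. The article does not specify any particular formulation. The sketch below simply fits A and B by least squares to amplitudes that have already been converted from offset to angle, a conversion that itself requires a velocity model. The input amplitudes are synthetic.

```python
import math


def fit_intercept_gradient(angles_deg, amplitudes):
    """Least-squares fit of amplitude = A + B * sin^2(angle); returns (A, B)."""
    xs = [math.sin(math.radians(a)) ** 2 for a in angles_deg]
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(amplitudes) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, amplitudes))
    gradient = sxy / sxx
    intercept = mean_y - gradient * mean_x
    return intercept, gradient


# Synthetic picks along one reflection, built from hypothetical A = -0.10, B = -0.20.
angles = [0, 5, 10, 15, 20, 25, 30]
amps = [-0.10 - 0.20 * math.sin(math.radians(a)) ** 2 for a in angles]
A, B = fit_intercept_gradient(angles, amps)
print(f"intercept A = {A:.3f}, gradient B = {B:.3f}")
```

In practice, interpreters cross-plot the fitted A and B values from many locations and look for points that depart from the background trend.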

DHI's and AVO belong to a large and rapidly growing family of techniques for assessing characteristics of rock layers between reflecting surfaces on the basis of seismic data. Development of these techniques involves learning to extract ever-more meaning from the basic information available in seismic data.

At a very basic level, that means not processing information out of the data prior to interpretation. For example, early 2D interpretation concerned itself mainly with the existence of an amplitude, or a pattern of amplitudes across a number of seismic traces, at certain reflection times. To make seismic records easy to use, a process called automatic gain control essentially equalized amplitude values in the seismic record. It wasn't until processing began preserving information about relative amplitude values that bright-spot interpretation became practical.
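The sketch below shows the problem with automatic gain control. It assumes a sliding-window RMS scaling, only one of several AGC variants, and it uses a hypothetical trace. After gain control, an event ten times stronger than its neighbor ends up with the same amplitude. The relative-amplitude information that bright-spot interpretation depends on is gone.

```python
import math


def agc(trace, half_window):
    """Divide each sample by the RMS amplitude in a sliding window around it."""
    out = []
    for i, sample in enumerate(trace):
        lo, hi = max(0, i - half_window), min(len(trace), i + half_window + 1)
        window = trace[lo:hi]
        rms = math.sqrt(sum(v * v for v in window) / len(window))
        out.append(sample / (rms + 1e-12))  # tiny constant avoids division by zero
    return out


# A weak event (0.1) and a bright event (1.0), ten times stronger, on one hypothetical trace.
trace = [0.0] * 100
trace[20], trace[70] = 0.1, 1.0
balanced = agc(trace, half_window=5)
print(f"before AGC: {trace[70] / trace[20]:.1f}x  after AGC: {balanced[70] / balanced[20]:.2f}x")
```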

Similarly, more work is now performed prior to stack (the combining of traces) than was done before computers became powerful enough to handle all the required calculations. Stack remains a fundamental way to improve the ratio of signal to noise in a seismic data set. It also produces the zero-offset data display essential to spatial interpretation. And it reduces the amount of data to which the computations involved in other processing steps, such as migration, must apply.
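The signal-to-noise gain from stacking is easy to demonstrate under two textbook assumptions. First, noise is random and independent from trace to trace. Second, after normal-moveout correction, the reflection signal is identical on every trace. Under those assumptions, averaging N traces cuts the noise by roughly the square root of N. The sketch uses synthetic data and a hypothetical 16-fold stack.

```python
import math
import random


def stack(traces):
    """Average aligned traces sample by sample (assumes NMO correction is done)."""
    n = len(traces)
    return [sum(samples) / n for samples in zip(*traces)]


def rms(values):
    return math.sqrt(sum(v * v for v in values) / len(values))


rng = random.Random(42)  # fixed seed so the run is repeatable
n_samples, fold = 2000, 16
signal = [math.sin(2 * math.pi * 25 * i * 0.004) for i in range(n_samples)]
traces = [[s + rng.gauss(0.0, 1.0) for s in signal] for _ in range(fold)]

stacked = stack(traces)
noise_before = rms([t - s for t, s in zip(traces[0], signal)])
noise_after = rms([t - s for t, s in zip(stacked, signal)])
print(f"noise reduced by {noise_before / noise_after:.2f}x; sqrt(fold) = {math.sqrt(fold):.2f}")
```

The same averaging is what discards information when traces do not actually share one signal. That happens with steep dips, for example, as the next paragraph notes.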

The stacking process, however, tends to average important information out of the data. The problem becomes acute in areas with steeply dipping structure. Migration or partial migration prior to stack preserves information of this type.

Especially with 3D migration, however, the computational requirements are extreme. It has been only since the early 1990s, when the power of computers reached necessary levels, that prestack migration in three dimensions became practical. One such application, 3D prestack depth migration, has become the main tool for seismic imaging in subsurface environments where sound velocities are subject to complex lateral change, such as those intruded by salt.

Attribute analysis

The use of relative-amplitude information and processing prior to stack are examples of ways geophysicists have found to preserve and interpret information carried in basic seismic data. Where once they used mainly the reflection times associated with the occurrence of an amplitude to interpret structure, they now increasingly use information about the amplitudes and associated wave forms themselves to interpret reservoir characteristics. To a lesser extent, they also make interpretive use of the frequency information that can be derived from seismic records. And in the future they'll probably learn to interpret a fourth type of information: attenuation, or the extent to which the sound impulse stretches and weakens in its travel through the subsurface.

These four types of information available in the seismic record-time, amplitude, frequency, and attenuation-define families of what geophysicists call "attributes," which open myriad paths for interpretation. They are far too numerous to catalog here, and the list grows rapidly. In very general terms, attribute analysis gives interpreters a variety of tools with which to derive meaning from seismic data.
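Many amplitude and frequency attributes descend from complex-trace analysis. It treats the recorded trace as the real part of an analytic signal whose imaginary part is the trace's Hilbert transform. The sketch below is a minimal, unoptimized illustration of that idea, not any particular vendor's attribute set. It computes reflection strength (the envelope), instantaneous phase, and instantaneous frequency for a synthetic cosine whose length and sample rate are assumed.

```python
import cmath
import math


def dft(x, inverse=False):
    """Plain O(n^2) discrete Fourier transform (inverse includes the 1/n factor)."""
    n = len(x)
    sign = 1.0 if inverse else -1.0
    out = []
    for k in range(n):
        s = sum(x[t] * cmath.exp(sign * 2j * math.pi * k * t / n) for t in range(n))
        out.append(s / n if inverse else s)
    return out


def analytic_signal(trace):
    """Trace plus i times its Hilbert transform, built by zeroing negative frequencies."""
    n = len(trace)
    weights = [0.0] * n
    weights[0] = 1.0
    for k in range(1, (n + 1) // 2):
        weights[k] = 2.0  # double positive frequencies
    if n % 2 == 0:
        weights[n // 2] = 1.0  # keep the Nyquist term once
    spectrum = dft(trace)
    return dft([s * w for s, w in zip(spectrum, weights)], inverse=True)


def complex_trace_attributes(trace, dt):
    """Envelope, instantaneous phase (rad), and instantaneous frequency (Hz)."""
    z = analytic_signal(trace)
    envelope = [abs(v) for v in z]
    phase = [cmath.phase(v) for v in z]
    # Phase change between samples, wrapped to (-pi, pi], divided by 2*pi*dt.
    inst_freq = [cmath.phase(z[i + 1] * z[i].conjugate()) / (2 * math.pi * dt)
                 for i in range(len(z) - 1)]
    return envelope, phase, inst_freq


# Hypothetical trace: a cosine at exactly 8 cycles over 128 samples of 4 ms (15.625 Hz).
dt, n = 0.004, 128
trace = [math.cos(2 * math.pi * 8 * i / n) for i in range(n)]
envelope, phase, inst_freq = complex_trace_attributes(trace, dt)
print(f"envelope ~{envelope[10]:.3f}, instantaneous frequency ~{inst_freq[10]:.3f} Hz")
```

On real traces the envelope and instantaneous frequency vary from sample to sample. That variation is what interpreters map.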

Shear waves

With seismic processing and interpretation able to, in effect, dig deeper and deeper into each seismic data set to discern ever more about the subsurface, a supplementary data type recently has come into its own. It's the record of information from shear (S) waves that, except in water, accompany the compressional (P) waves more commonly used in reflection seismic work. The difference is in the direction of material motion by which the waves propagate through the subsurface-P waves as a series of pushes and pulls along the direction of wave travel, S waves as chains of sideways oscillations.

The usefulness of S wave data has long been recognized. The ratio of P and S wave velocities in a rock layer, for example, provides information about the elastic qualities of the rock and thus, in combination with other information, can sometimes determine rock type and indicate the presence of fluids. But S-wave data sets have tended to be noisy and difficult to interpret. And because S waves don't move through water, their value in marine environments was thought to be limited.
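One standard way to express the elastic information carried by the velocity ratio is Poisson's ratio. For an isotropic elastic solid, it follows directly from Vp/Vs. The velocity pairs in the sketch are assumed values for illustration only.

```python
def poissons_ratio(vp, vs):
    """Poisson's ratio of an isotropic elastic solid from its P and S velocities."""
    g2 = (vp / vs) ** 2
    return (g2 - 2.0) / (2.0 * (g2 - 1.0))


# Hypothetical velocity pairs (m/s); the units cancel.
for vp, vs in [(2000.0, 1333.0), (3000.0, 1500.0), (2500.0, 1000.0)]:
    print(f"Vp/Vs = {vp / vs:.2f} -> Poisson's ratio = {poissons_ratio(vp, vs):.3f}")
```

A Vp/Vs of 2, for instance, corresponds to a Poisson's ratio of one-third.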

In the latter half of the 1990s, however, geophysicists have produced good results with S and P wave data recorded together in water with multicomponent, or four-component (4C), recording equipment attached to the seabottom. The four components include a geophone with two perpendicular horizontal elements plus a vertical element and a hydrophone. The horizontal elements in the geophone record S waves generated when the downward traveling P wave encounters the seabottom or, more frequently, reflecting horizons. S waves generated in this manner are called converted waves. The geophone's vertical element and the hydrophone record P wave energy.
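Because the upgoing S wave is slower than the downgoing P wave, a converted wave does not reflect at the source-receiver midpoint. It converts at a point nearer the receiver. The sketch below finds that point for a single flat reflector beneath one homogeneous layer by solving Snell's law with bisection. It compares the result with the commonly used deep-reflector ("asymptotic") approximation. The geometry and velocities are assumptions.

```python
import math


def conversion_point(offset, depth, vp, vs, iterations=100):
    """Distance from source to the P-to-S conversion point on a flat reflector.

    Solves Snell's law, sin(theta_p) / vp = sin(theta_s) / vs, by bisection for a
    single homogeneous layer above the reflector.
    """
    def mismatch(xc):
        sin_p = xc / math.hypot(xc, depth)
        sin_s = (offset - xc) / math.hypot(offset - xc, depth)
        return sin_p / vp - sin_s / vs  # increases monotonically with xc

    lo, hi = 0.0, offset
    for _ in range(iterations):
        mid = 0.5 * (lo + hi)
        if mismatch(mid) < 0.0:
            lo = mid
        else:
            hi = mid
    return 0.5 * (lo + hi)


def asymptotic_conversion_point(offset, vp, vs):
    """Deep-reflector limit: offset * gamma / (1 + gamma), with gamma = vp / vs."""
    gamma = vp / vs
    return offset * gamma / (1.0 + gamma)


# Hypothetical geometry: 2,000-m offset, reflector 1,500 m deep, Vp/Vs = 2.
exact = conversion_point(2000.0, 1500.0, 2000.0, 1000.0)
approx = asymptotic_conversion_point(2000.0, 2000.0, 1000.0)
print(f"conversion point {exact:.0f} m from source "
      f"(asymptotic {approx:.0f} m; midpoint 1000 m)")
```

Processing converted-wave data therefore requires binning by conversion point rather than by midpoint.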

Marine 4C surveys take advantage of differences of behavior between P and S waves. S waves, for example, travel more slowly than P waves. And the presence of fluid in a rock layer strongly affects the travel of P waves but has little effect on that of S waves. Comparisons of P and S wave data, therefore, provide information not available from P wave data alone. While early 4C surveys were conducted in two dimensions only, 3D 4C surveys have begun to emerge. And the step to 4C recording in marine 4D (time-lapse 3D) surveys, now in growing use to monitor reservoir changes from production over time, is logical once costs decline.
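Because S waves are slower, the same reflector arrives later on PS records than on PP records, and interpreters must register the two before comparing them. The sketch below assumes vertical travel through a single constant-velocity layer. Under that assumption, the PS/PP time ratio is (1 + Vp/Vs) / 2. Inverting the ratio turns a pair of picked times into a Vp/Vs estimate. The layer properties are hypothetical.

```python
def pp_time(depth, vp):
    """Vertical two-way time of a reflected P wave."""
    return 2.0 * depth / vp


def ps_time(depth, vp, vs):
    """Vertical time of a P wave down and a converted S wave back up."""
    return depth / vp + depth / vs


def vp_vs_from_times(t_pp, t_ps):
    """Invert (1 + Vp/Vs) / 2 = t_ps / t_pp for the velocity ratio."""
    return 2.0 * t_ps / t_pp - 1.0


# Hypothetical layer: reflector at 1,500 m, Vp = 3,000 m/s, Vs = 1,500 m/s.
t_pp = pp_time(1500.0, 3000.0)
t_ps = ps_time(1500.0, 3000.0, 1500.0)
gamma = 3000.0 / 1500.0
print(f"PP {t_pp:.2f} s, PS {t_ps:.2f} s, ratio {t_ps / t_pp:.2f}, "
      f"recovered Vp/Vs {vp_vs_from_times(t_pp, t_ps):.2f}")
```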

The recent emergence of marine 4C technology demonstrates an important quality of seismic progress. Shear waves were not a breakthrough discovery of the 1990s. Their existence has been recognized for decades. What happened in the 1990s is that geophysicists armed with computing power not previously available assembled the techniques and know-how to record reflections of converted waves on the ocean bottom and to interpret their movement in the subsurface.

Seismic methods most frequently advance in that fashion: in subtle increments rather than huge breakthroughs. Exceptions to this rule from the past would have to include the transitions from 2D to 3D, from analog to digital recording, and from paper records to interactive workstations.

Large or small, each such step represents a new tool. And no one of these tools by itself ever solves the subsurface mystery. Seismic progress represents the accumulation of these tools and development of the skill of interpreters in selecting the right combination of tools for the earth problem at hand.

Visualization

The present state of the seismic art thus emerged from an elaborate tango of incremental technical development and growth in computing power. The resulting tool chest enables geophysicists to create images of the subsurface in three dimensions with vastly improved resolution. It further allows them to draw important inferences about rock layers from attributes of reflected sound waves defined by surface or marine measurements.

An extrapolation of trends might suggest that, constraints to the resolving power of sound notwithstanding, a "picture" of the subsurface is emerging. Can this be so?

The answer, of course, is no, not in the sense of an image on a computer screen that somehow "looks" like a target feature of the subsurface. The new techniques manage to get shape and location fairly close to correct. But earth scientists don't deal with snapshots and never will.

What is emerging is an amazing array of visualization tools that let processors and interpreters use human visual acuity to decipher complex numerical relationships. The emerging tools also enable people working with seismic and other types of data to escape the confines of the desktop computer integral to workstations.

Geophysical interpretation increasingly occurs in visualization centers able to provide life-size projections of images rendered from seismic and other types of data. Interpreters wearing stereoscopic glasses can view the images in 3D. In some centers, called immersive environments, users can have the sensation of walking around within the images.

The centers do not attempt to visually reproduce the subsurface, of course. Images projected in visualization centers are bright with colors, changes in which depict changes in data or data types. Images of selected surfaces can be highlighted and surrounding images rendered invisible or translucent. Artificial shading can show changes in dip azimuth. Attributes can be isolated and color-coded to show value changes. The combinations are endless.

But none of it "looks" like the subsurface. Visualization techniques, whether they occur in an immersive environment or at a workstation, move geophysical work beyond the traditional seismic function of visually modeling physical complexity. They now address the quantitative complexity associated with the visual models that seismic progress renders increasingly precise. An old trend thus takes off in a new direction.

Future steps, in fact, will transcend the visual to take advantage of human senses other than sight. Sounds of varying pitch, for example, might someday hum inside immersive centers to represent variations in seismic frequency. Through special gloves simulating touch sensations, interpreters might seem to feel changes in physical texture and pressure of target subsurface features. Someone in an immersive center thus might move through a life-scale seismic image and sensually experience changes in physical properties while monitoring sonic responses through sound and color codes. And, yes, it might even prove useful to simulate the smell of oil in appropriate places if, for example, visual cues are busy showing subtle changes in a seismic attribute.

The trends point to use of more and more of human perception in the interpretation of data from the subsurface. To repeat, however, projecting trends is always dangerous, especially in a complex and rapidly developing area such as geophysics. Just 20 years ago, when seismic interpretation meant connecting wiggles on 2D records with colored pencils, talk about walking around inside seismic images would have provoked laughter.

Today's projections will no doubt fall victim to surprise. And the surprises that spoil today's projections will reveal wonderfully much about the dark realm where hydrocarbons hide.

The Author

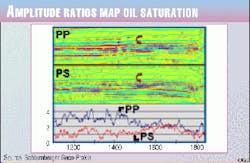

Marine 4C data used to map oil saturation

MARINE FOUR-COMPONENT (4C) seismic surveys reveal important information not just about structure but about contents of hydrocarbon reservoirs.

In a paper delivered at the 1999 Offshore Technology Conference, Jack Caldwell, manager, reservoir solutions, of Schlumberger Geco-Prakla showed how ratios of amplitudes of reflected compressional (PP) waves and converted shear (PS) waves can in some cases map hydrocarbon saturation.

The figure, from Caldwell's paper, shows North Sea data.

The curved arrows indicate seismic events associated with an oil-bearing sand extending from a fault on the right side of the plot until about location 1550. At that point, the sand becomes water-bearing.

The chart at the bottom of the figure plots the maximum amplitude of the seismic event associated with the horizon of interest.

The PP and PS amplitudes roughly track each other along the oil-bearing extent of the horizon. They diverge where the interval associated with the horizon becomes water-bearing and remain separate leftward from there.

One observer's views of multicomponent, ocean-bottom seismic methods

FOR ONE PROMINENT geophysicist, advantages of multicomponent, ocean-bottom seismic surveys help to define current industry progress.

Asked by Oil & Gas Journal to identify important avenues for future technical advance, Ian G. Jack, geophysical adviser and R&D project manager for BP Amoco in the UK, pointed to multicomponent seismic and related ocean-bottom acquisition techniques as the most important.

He provided the following list of advantages of these methods:

- Surveys using ocean-bottom detectors often return additional bandwidth, both at the low and at the high frequency end of the spectrum. This has high potential for improved resolution with immediate benefit to reservoir imaging and characterization and will assist in the integration of log and seismic scales.

- Where reservoirs are obscured by overlying gas clouds or plumes, shear wave velocities vary less, and there is less "scatter" of the shear wavefield; thus the reservoir image is better, especially for the converted waves, and depth conversion is easier.

- Many reservoirs, especially sands overlain by shales, have poor P-wave reflectivities. Impedance contrasts for shear waves can often be considerably better under these circumstances, allowing geophysicists to improve the reservoir image.

- Reverberations, or "multiples," often contaminate P-wave data so badly that the images are obscured. Ocean-bottom technology can impact this in several ways. First, the combination of pressure and velocity measurement can allow discrimination between upcoming and downgoing wavefields, enabling attenuation of the downgoing free-surface multiples. Secondly, shear waves do not exist in the water column, so they have no associated "free-surface multiples" at all. Thirdly, shear waves have different velocities; thus interbed multiples occur in different places, which could allow improved reservoir imaging.

- "Bright spots" are widely used in fluid prediction. However, P-wave data cannot readily distinguish lithology bright spots from fluid bright spots. Since shear waves are mainly influenced by the lithology and not the fluids, the existence of both data sets can allow the two to be distinguished.

- Subsalt and sub-basalt imaging are notoriously difficult with P-wave data. However, the salt and basalt interfaces are likely to be good generators of converted P-to-S-wave data, providing a rich supply of additional data with which to image those interfaces.

- The amplitude ratio of P waves versus converted waves at top reservoir is sensitive to saturation in some reservoirs. From a calibration point at a well, it is then possible to map saturation away from the well.

- A much more complete data set can be acquired with fixed seabed detectors than with conventional towed-cable systems. Source-receiver distances can easily be extended both back to zero and out to larger offsets. Furthermore, a complete 360° range of azimuths can be recorded. This constitutes a step-function increase in data redundancy with consequent implications for noise attenuation and image improvement, assuming the necessary algorithms are available.

- The polarization of the converted waves and the ability to distinguish the components of shear-wave splitting will provide information on stress directions in the overburden and on fracture directions in the reservoir.

- Time-lapse seismic shows great promise as a tool which can influence reservoir management. The use of emplaced detector systems should maximize the repeatability of seismic data, allowing improved sensitivity to fluid, pressure, and temperature changes. Furthermore, the acquisition of repeat data sets then becomes much cheaper (although currently with a higher up-front cost).

- Additional refinements and developments of the technology, such as vertical cables set into the seabed, will allow even better multiple rejection and reservoir imaging.

- Emplaced detector systems may allow the use of weaker seismic sources, with signal being built up over longer periods of time rather than in one high-energy explosion (a focus of recent Greenpeace attention).

- Longer term, the emplaced-detector technology would eventually encourage implementation of shear-wave sources, thus reducing or eliminating dependence on mode-conversion as a source of shear waves.
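The pressure-velocity combination mentioned in the multiples item is often called PZ summation. In its simplest form, the pressure (P) and scaled vertical-velocity (Z) records are added and subtracted to isolate upgoing and downgoing energy. This works only under strong assumptions: vertically traveling plane waves, calibrated and well-coupled sensors, and a known water impedance. The sketch below is illustrative only. Sign conventions differ between systems, and field implementations must also handle angle dependence and geophone noise.

```python
def separate_up_down(pressure, vertical_velocity, water_impedance):
    """Split ocean-bottom recordings into upgoing and downgoing pressure wavefields.

    Convention assumed here: vertical particle velocity is positive downward, so a
    downgoing plane wave has P = +Z * v and an upgoing one P = -Z * v, where Z is
    the acoustic impedance of water (density times sound speed).
    """
    up, down = [], []
    for p, v in zip(pressure, vertical_velocity):
        scaled = water_impedance * v
        up.append(0.5 * (p - scaled))
        down.append(0.5 * (p + scaled))
    return up, down


# Synthetic vertical-incidence example: a primary arriving from below at sample 2,
# then its downgoing free-surface reflection (polarity reversed) at sample 5.
Z_WATER = 1000.0 * 1500.0  # ~1,000 kg/m^3 times ~1,500 m/s (assumed values)
true_up = [0.0, 0.0, 1.0, 0.0, 0.0, 0.0]
true_down = [0.0, 0.0, 0.0, 0.0, 0.0, -1.0]
pressure = [u + d for u, d in zip(true_up, true_down)]
velocity = [(d - u) / Z_WATER for u, d in zip(true_up, true_down)]
up, down = separate_up_down(pressure, velocity, Z_WATER)
```

The hydrophone alone records both arrivals mixed together. The combination assigns the primary to the upgoing field and the free-surface reflection to the downgoing field.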

Other advances

Progress in multicomponent, ocean-bottom techniques will build on, and proceed alongside, incremental improvements to earlier technical advances, Jack said.

Among the advances he cited, 3D seismic will continue to evolve, especially in marine work where it is relatively fast and inexpensive and where new vessels tow growing numbers of cables of increasing length and have better "up time."

Attribute analysis will evolve in appraisal and development work. And seismic information is finding a role in drilling through the "geosteering" of wells.

Geophysicists, Jack noted, now produce and interpret several versions of each 3D volume and have the computer power to produce images quickly, which encourages iteration and improves data quality.

Time-lapse seismic is moving from the research stage into actual reservoir management, and multicomponent seismic is moving from 2D to 3D.

Visualization technology is helping to integrate the technical disciplines associated with exploration and production. And a growing number of data sets are being processed and presented in depth rather than time.