LEAN ENERGY MANAGEMENT—7: Knowledge management and computational learning for lean energy management

Most "energy factories," such as refineries and petrochemical plants, nuclear and electric power generation facilities, and electricity transmission and distribution systems, have processes that are centrally controlled by operators monitoring supervisory control & data acquisition (SCADA) sensors distributed throughout the field operations.

The National Aeronautics & Space Administration's Mission Control exemplifies this type of control center methodology that uses computers to keep operators up to date with measurements that monitor the real-time conditions of the system, whatever it is.

A revolution in operational engineering, known collectively as "lean management," has swept through entire manufacturing industries such as automotive, aerospace, and pharmaceuticals and landed squarely in our laps, in field production environments such as upstream oil and gas exploration and production.

Just-in-time assembly, supply chain and workflow management, and action tracking have become standard best practices taught in virtually every business school in the world.

Lean has become synonymous with production efficiency and with continuous performance metrics feeding simulations that, in turn, drive unrelenting process improvement at companies such as Toyota, Boeing, General Electric, and Dow.

At Lexus, lean has evolved into the now famous advertising slogan: "the relentless pursuit of perfection." As information has proliferated, driven by cheap sensors and the internet, central control centers are migrating to more distributed computer support systems.

Not only is information on the state of the field operations now being delivered to all users in near real-time, but support has begun to be provided about the most efficient process control decisions to make at any given time and condition for remote sites scattered throughout the world.

A general term for this progression in computer support is the move from "information" to "knowledge" management. By the turn of the century, "total plant" kinds of integrated, enterprise management systems were installed in most control centers of mega-manufacturing facilities in the world, including our downstream operations.

"Optimization of the enterprise" is beginning to be augmented with "learning systems" that "close the loop" to empower control center personnel to take actions to modify processes based upon the lessons learned from analysis of past-performance and real-time market conditions.

Modern knowledge management in 3D

This evolution in knowledge management can be illustrated in terms of common games.

The TV show "Jeopardy" can be won consistently if information is managed efficiently. A laptop computer these days can win every game. All that is required is a good encyclopedia in its information database and a "Google-like" rapid data mining capability.

Backgammon is a step up from "Jeopardy" in complexity. A computer again can be trained to win every game, but it must be programmed to deal with the uncertainties introduced by the throw of the dice. Moves must be re-computed at each move based on a changing board position.

Chess is another matter, however. Strategies, end-games, pattern recognition, and tactics must all be mastered, in addition to managing information and uncertainty. A few years ago, IBM tied for the World Chess Championship, but it required a dedicated supercomputer, "Big Blue," to sort through all possible future moves as the game progressed.

Last year, an Israeli minicomputer beat the human chess champion of the world. The machine used machine learning (ML) technologies to recognize patterns, evaluate likelihoods of success of move sequences, and optimize board positions and strengths. It did not compute every possible move for every possible contingency to the end of every possible game, as Big Blue had.

This illustrates the progression first from information management to knowledge management and now to what is called "machine learning." ML promises to further revolutionize process control in all of the diverse industries mentioned above, from aerospace and the military, to refinery and petrochemical plant control, to pharmaceutical and automotive assembly.

How does the upstream energy industry adapt its decision support systems to this new knowledge management paradigm?

Progress in three dimensions is required. We must simultaneously climb the knowledge ladder, close the feedback loop, and add ML to our enterprise management systems.

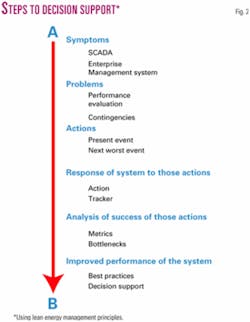

In this article we examine each plane that builds this process control cube. Progress in each plane must be coordinated if we are to get from our current state A to a future lean state B in the knowledge cube (Fig. 1).

The knowledge ladder

The best known plane of the cube for improving process control is the "knowledge ladder."

Migrating from data management to information management allows relationships among data to be defined. Adding pattern recognition converts the information to more actionable information about how the system works. But this is still not knowledge.

Knowledge requires people. There have been many failures from not recognizing this human requirement to get from information to knowledge. That said, there is a role for knowledge management tools and best-practices capture at this level, but it must be recognized, and it is a core concept of the lean approach, that knowledge is fundamentally in the minds of people.

Close the feedback loop

In order to attain the conversion of data into wisdom, computer models must evolve from just providing possible operational scenarios to being capable of modeling whole system performance.

Consistency and linkages among the many "silos" of management responsibility must be modeled first before they can be understood and implemented.

Then models must become dynamic rather than static. That is, time-lapse information must be used to continually update the control model with system interactions and feedback.

Finally, adaptive models have been developed that execute the process based on continually evolving evaluation of performance. The feedback loop must take incoming data, translate it into actions, evaluate the effectiveness of those actions, and then modify future actions based upon a likelihood of success.
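The four steps of the loop (take in data, act, evaluate, adjust) can be sketched in a few lines. This is only a minimal illustration, not the controller described in this series: it assumes a simple explore-and-exploit rule, and the action names and success probabilities are invented for the example.

```python
import random


def run_feedback_loop(success_prob, steps=5000, explore=0.1, seed=0):
    """Adaptive loop: act, observe the outcome, update the estimated likelihood of success.

    success_prob maps each hypothetical action name to its true (hidden)
    chance of success; the loop itself only sees individual outcomes.
    """
    rng = random.Random(seed)
    actions = list(success_prob)
    tries = {a: 0 for a in actions}
    wins = {a: 0 for a in actions}

    def estimate(a):
        # Estimated likelihood of success; untried actions get an optimistic 1.0.
        return wins[a] / tries[a] if tries[a] else 1.0

    for _ in range(steps):
        # Translate the current state of knowledge into an action.
        if rng.random() < explore:
            action = rng.choice(actions)         # occasionally try an alternative
        else:
            action = max(actions, key=estimate)  # otherwise use the best-scoring action
        # Evaluate the effectiveness of the action (a simulated outcome here).
        succeeded = rng.random() < success_prob[action]
        # Modify future behavior by updating the running score.
        tries[action] += 1
        wins[action] += int(succeeded)

    return {a: estimate(a) for a in actions}, tries


# Invented actions and probabilities, for illustration only.
estimates, tries = run_feedback_loop(
    {"keep_current_plan": 0.55, "adjust_mud_weight": 0.80, "sidetrack": 0.30}
)
best = max(estimates, key=estimates.get)
```

In this sketch the loop ends up spending most of its trials on the action with the highest observed success rate, while still sampling the others often enough to notice if conditions change.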

Machine learning

Perhaps the least understood of the knowledge planes is ML.

The natural progression is from understanding data, to modeling the enterprise, to the addition of new ML technologies that learn from successes and failures so that continuous improvement is not only possible but is the operational dictum.

We know the energy industry has enough data and modeling capabilities to evolve to this new lean and efficient frontier.

Whenever an event happens, such as the Aug. 14, 2003, Northeast US blackout or a well blows out, we set up a study team that quickly reviews all incoming field data, models the system response, identifies exactly what went wrong where and when, and develops a set of actions and policy changes to prevent the event from happening again.

Progress up the ML plane requires that this data analysis, modeling, and performance evaluation be done all day every day. Then the system can be empowered to continuously improve itself before such catastrophic events happen to the system.

ML uses computer algorithms that improve actions and policies of the system automatically through learned experiences. Applications range from data mining methods such as principal component analysis, which discover general rules in large data sets, to information filtering systems that automatically learn users' interests.

The Support Vector Machine (SVM) is a widely used method in ML for the latter. SVMs have eclipsed neural nets, radial basis functions, and other older learning methods.

Importance to E&D

This revolution is just beginning to spread into the exploration and development of oil and gas fields because it is difficult to execute lean energy management at remote locations where "unplanned events" are the norm.

Lean processes require extensive planning and simulation by integrated teams (Fig. 2). Five times as much work must be done on the computer to simulate possible outcomes as is done on the physical work in the field.

It is easy for teams that are not fully integrated to fall into the "silo trap" by allocating support tasks to subgroups of narrowly focused experts. The tendency is to isolate the exploration of "what if" contingencies into those teams that are most comfortable with them.

Only by supporting lean planning with vigorous software checks and balances that require integrated inputs across all teams can this trap be avoided. Anyone who has worked a very large development project offshore can probably recognize that cost and time overruns always seem to happen because of unforeseen events. Why were they unforeseen?

"Unforeseen" also implies that contingencies to deal with them were not available. No wonder fully 35% of all deepwater development projects in the world are over budget and delivered late.

Lean energy management requires that the exploration space for the risking of problems be ever expanding, with a feedback loop so that every new event from wherever it occurs in the company's worldwide operations is captured in computer memory and can never be unforeseen again.

The current state of ultradeepwater exploration and development is a case in point. Best practices, if they exist formally at all, are isolated and not likely to be shared from project to project let alone company to company.

Proof can be immediately found in the break from lean principles: Where is the 50% savings in cost and cycle time from each project to succeeding project that lean has delivered consistently for other industries? Instead of standardization and modularization over time, we get 60% operability in the delivered product, when lean standards require 90% or better.

Profitability, even at current prices, requires better performance. Why can't we take a year off the time to first oil for each major new project in the ultradeepwater? Why aren't all projects 100% on time and to budget? How come we continue to have "train wrecks"?

Connection to LEM

Lean energy management is an enabling technology to get to these lofty goals.

For example, why can't we have earlier engagement of our concept design teams? They can then follow through the design/build process by continuing to develop scenarios for what-if events. Then nothing will happen during fabrication that has not been anticipated, and contingency plans will already be in place and trained for.

In such a lean world, customization tendencies are fought at every turn, interface issues are dealt with continuously, and everything is measured so that it can be scored and formally managed via software support. This progression is well known in other industries as a natural path up the knowledge management cube.

This description of lean energy management seems complicated, but in fact it is simple. There are five basic rules:

1. A 5:1 ratio of modeling and simulation versus field operations, compared to standard practices in our industry of a 1:5 ratio! Spend 5 times more than today in upfront computing of possible outcomes, and the plan to deal with them becomes a moneymaking proposition.

2. The same 3D solid model must be used by all. Everyone has the same model, and it is updated in real time.

3. Metric everything, all the time! Only then can it be managed.

4. Constantly ask whether you are making more money. And above all else:

5. Long-term commitment from management is required. If you say you are already doing 1 to 3 but are not saving 50% in both cycle time and costs, then your company hasn't gotten the concept of lean yet.

Proof of concept: lean exploration drilling

The history of lean implementation says that relatively small-scale pilot projects make the best way to begin to learn. Do not bite off too big a project at the start.

For example, consider exploration drilling in the deep water. Each well costs a minimum of tens of millions of dollars. With lean energy management and a success rate exceeding 60% because of improved subsurface hydrocarbon imaging, faster and cheaper quickly converts to improved profitability because you are drilling more successful wells each year.

The things in exploration drilling that really catch us are the unplanned events. We are used to operating in teams, but teams tend to wait until an event dictates the next move rather than planning for contingencies all day every day, as in the electricity control business, for example.

A common problem with exploration wells is that we could have planned the recovery process for mishaps, but we didn't anticipate them in the first place. This is a common lean problem that requires progression from reactive, to predictive, and then preventive planning.

The key is getting out of the box that only examines routine contingencies that are well known.

As an industry, we work the basic well to the nth degree. However, everything that goes wrong with an exploration well typically sits outside this box, and this is where we really need lean processes to learn how to do better contingency planning.

We must learn to minutely challenge every step of the exploration well, but siloed teams have led to a large degree of isolation, lack of integration, and no real challenge to the status quo. Managing the basic tasks is done well, but since lean integration has never taken place, operational changes are not adequately dealt with by other silos.

To compound the situation, probabilistic costing is based on managing the basic planned risks and contingencies that we recognize. We are good at addressing risk all the way up to spud. However, the end result after spud is consistently out of plan and over budget. And to prove that we do not learn well from our lessons, we don't metric our performance after spud very well at all. We can never manage what we don't measure!

At Columbia, we have developed a series of implementation steps to migrate a company that is already good at knowledge management to process optimization and lean energy management using ML. Consider the demonstration project below as a proof-of-concept for a lean exploration drilling campaign.

We begin by populating a series of matrices that are adept at learning, which we call suitability matrices because they describe the sequence from symptoms-to-problems and then problems-to-solutions for any given contingency.

Composition and hierarchy are derived through the matrix formalization and used to guide the ML feedback loop towards optimal decision support. We map the "as is" processes before using the ML algorithms and human-in-the-loop feedback to recommend the "to be" state.
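One way to picture a pair of suitability matrices is as two small lookup tables chained together, with a feedback step that adjusts a weight whenever a recommended solution is tried. The sketch below is a guess at the general shape only, not the matrices used in the demonstration project. Every symptom, problem, solution, and weight in it is hypothetical.

```python
# Hypothetical labels and weights, invented for illustration only.
SYMPTOMS = ["torque_spike", "mud_loss", "pressure_kick"]
PROBLEMS = ["stuck_pipe", "lost_circulation", "well_control"]
SOLUTIONS = ["work_pipe_free", "pump_lcm_pill", "shut_in_well"]

# Rows: symptoms, columns: problems. Entry = how strongly a symptom suggests a problem.
SYMPTOM_TO_PROBLEM = [
    [0.9, 0.1, 0.0],
    [0.1, 0.8, 0.2],
    [0.0, 0.2, 0.9],
]
# Rows: problems, columns: solutions. Entry = how suitable a solution is for a problem.
PROBLEM_TO_SOLUTION = [
    [0.9, 0.0, 0.1],
    [0.1, 0.8, 0.1],
    [0.0, 0.1, 0.9],
]


def vec_mat(vec, mat):
    """Multiply a row vector by a matrix held as plain Python lists."""
    return [sum(v * row[j] for v, row in zip(vec, mat)) for j in range(len(mat[0]))]


def recommend(observed):
    """Rank solutions, best first, for a set of observed symptom names."""
    symptom_vec = [1.0 if s in observed else 0.0 for s in SYMPTOMS]
    problem_scores = vec_mat(symptom_vec, SYMPTOM_TO_PROBLEM)
    solution_scores = vec_mat(problem_scores, PROBLEM_TO_SOLUTION)
    return sorted(zip(SOLUTIONS, solution_scores), key=lambda p: p[1], reverse=True)


def reinforce(problem, solution, worked, rate=0.1):
    """Feedback step: nudge one problem-to-solution weight toward the observed outcome."""
    i, j = PROBLEMS.index(problem), SOLUTIONS.index(solution)
    target = 1.0 if worked else 0.0
    PROBLEM_TO_SOLUTION[i][j] += rate * (target - PROBLEM_TO_SOLUTION[i][j])


ranking = recommend({"mud_loss"})
```

Because the weights sit in plain tables, a human in the loop can read exactly why a solution was ranked first, and `reinforce` records whether it actually worked.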

Lean is a people process

Transparency to humans-in-the-loop is required for acceptance in any organization. That means that all participating decision makers must be able to see the benefits all along the way. No logic can be hidden from subcontractors, a tough hurdle for our industry.

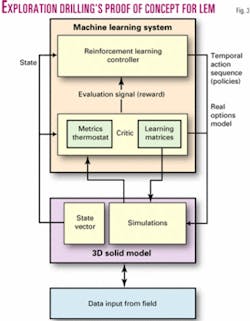

We then use multiple runs of computer models either to simulate outcomes or, in real time, to capture the outcomes from decision making (Fig. 3). We feed both into an ML system to create the feedback loop.

Supervised learning begins with a training dataset of inputs and known outcomes. For each trial, the learning algorithm is given the input data, asked to predict an outcome, and scored against the known outcome; performance is then checked on a separate testing dataset. The goal is to minimize the number of mistakes, not to converge on a 100% correct answer.
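The predict-then-score cycle can be illustrated with a perceptron, one of the simplest mistake-driven learners. It is used here only because it is short, and it is not the method applied in the demonstration project. The training data are made up, and the two inputs stand for any pair of normalized measurements.

```python
def train_perceptron(examples, epochs=20):
    """Mistake-driven learner: weights change only when a prediction is wrong.

    examples is a list of (inputs, outcome) pairs with outcome in {+1, -1}.
    Returns the learned weights, the bias, and the mistakes counted on each pass.
    """
    n = len(examples[0][0])
    weights, bias = [0.0] * n, 0.0
    mistakes_per_epoch = []
    for _ in range(epochs):
        mistakes = 0
        for inputs, outcome in examples:
            # Ask the learner to predict the outcome for this trial.
            score = sum(w * x for w, x in zip(weights, inputs)) + bias
            predicted = 1 if score > 0 else -1
            # Score the prediction against the known outcome and learn from errors.
            if predicted != outcome:
                mistakes += 1
                weights = [w + outcome * x for w, x in zip(weights, inputs)]
                bias += outcome
        mistakes_per_epoch.append(mistakes)
    return weights, bias, mistakes_per_epoch


def predict(weights, bias, inputs):
    """Return +1 (predicted success) or -1 (predicted failure)."""
    return 1 if sum(w * x for w, x in zip(weights, inputs)) + bias > 0 else -1


# Made-up training set: two normalized inputs -> success (+1) or failure (-1).
training = [
    ((0.9, 0.2), 1), ((0.8, 0.1), 1), ((0.7, 0.3), 1),
    ((0.2, 0.9), -1), ((0.1, 0.7), -1), ((0.3, 0.8), -1),
]
weights, bias, mistakes = train_perceptron(training)
```

The list `mistakes` holds the number of wrong predictions on each pass through the data, which is the quantity the learner is trying to drive down.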

ML and data mining technologies turn this input, along with outcomes and scoring, into decision support policies and recommended actions (knowledge).

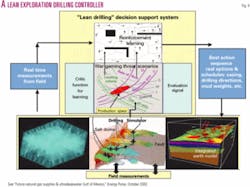

The critical element in lean exploration drilling is a 3D solid earth model of the entire space from surface to beneath the deepest potential reservoir (Fig. 4). Seismic technologies can describe this volume in velocity, density, and reflectance space quite well. Inputs consist of drilling variables all along potential well path scenarios.

A drilling simulator model is run over and over to explore the contingencies of possible wells, including costs and real options all along the way. The outputs are fed into an ML system that determines rules for optimal actions and policies for success.

This form of ML is known as reinforcement learning, a type of dynamic programming in computer lingo. The ML system provides the critic function and tracks success using a "metrics thermostat" approach described in our earlier articles in this series.
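As a rough illustration of running a simulator over and over and letting the learner extract a policy, the sketch below uses tabular Q-learning, a standard reinforcement learning method chosen for brevity and not necessarily the one in our controller. The four-section well, the two drilling modes, and all costs are hypothetical.

```python
import random

SECTIONS = 4          # hypothetical hole sections, drilled in order
HAZARD_SECTION = 2    # hypothetical section containing a known hazard
ACTIONS = ("fast", "careful")


def simulate_step(section, action, rng):
    """Toy drilling simulator: return the reward (negative cost) for one section."""
    if action == "careful":
        return -2.0
    cost = 1.0
    if section == HAZARD_SECTION and rng.random() < 0.5:
        cost += 10.0  # unplanned event while drilling fast through the hazard
    return -cost


def learn_policy(episodes=5000, alpha=0.1, epsilon=0.2, seed=0):
    """Run the simulator repeatedly and learn which action to take in each section."""
    rng = random.Random(seed)
    q = {(s, a): 0.0 for s in range(SECTIONS) for a in ACTIONS}
    for _ in range(episodes):
        for section in range(SECTIONS):
            if rng.random() < epsilon:
                action = rng.choice(ACTIONS)  # explore
            else:
                action = max(ACTIONS, key=lambda a: q[(section, a)])  # exploit
            reward = simulate_step(section, action, rng)
            nxt = section + 1
            future = 0.0 if nxt == SECTIONS else max(q[(nxt, a)] for a in ACTIONS)
            # Q-learning update: move the estimate toward reward plus best future value.
            q[(section, action)] += alpha * (reward + future - q[(section, action)])
    policy = {s: max(ACTIONS, key=lambda a: q[(s, a)]) for s in range(SECTIONS)}
    return policy, q


policy, q_values = learn_policy()
```

In this toy setting the learned policy drills fast where that is cheap and slows down only in the hazardous section, because the simulated mishaps make fast drilling there more expensive on average.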

The establishment of the feedback loop for continuous improvement using real options is then the key new ingredient added by lean processes. All actions are tracked in the software, and updates are automatically provided to all.

The lean exploration drilling controller can also be configured as a computer-based simulation tool for training drillers to make optimal real-time decisions. Further, the controller can be configured as an adaptive aiding system, which is useful for additional computer-based training (think flight simulator).

The controller can be used to advise or guide operators on what actions to take in the same manner that common car navigation systems are used to guide or direct car drivers. The controller also continually tracks the current state of the drilling systems to provide look-ahead contingency analyses, a common practice in other industries.

Our proof-of-concept lean exploration drilling system is configured to evaluate opportunities as real options (see previous parts of this series). The learning system uses feedback to generate actions or decisions that are always in the money (i.e., a martingale in business terms) with respect to both financial profitability and engineering efficiency.

The "martingale" learning system, tied back to the drilling simulator, can be configured to display, in real time, remote subsea decisions affecting the form and timing of drilling events in exploration drilling campaigns. An integrated 3D earth model representing the reservoir and all hazards between it and the surface is developed to drive this simulation.

The 3D model includes production constraints based, for example, on pore pressure ranges likely to be encountered by each well. The martingale controller is trained to generate flexible drilling schedules that honor production constraints and produce exemplary cost and delivery times. The flexible drilling schedules are optimized on the basis of total economic value determined by real options.

Summary

Lean management has steadily evolved from simple process control to information and then knowledge management and currently to real-time optimization of the product being manufactured.

Lean systems not only tell the decision maker what might happen next, but they also present contingency information in a clear and concise way at all times.

Operators particularly need help when multiple areas have significant problems at the same time, and lean decision support provides not only what is likely to happen next but also the risks and ramifications of different preventive remediation sequences.

"Pain indices" that record actions taken then provide a basis for future ML so that the system gets better and better at its decision support and training jobs.

The lean exploration drilling system learns to drill better and more efficient wells, faster and safer, as time goes by.

Further reading

"Smart Grids and the American Way," ASME Power and Energy, March 2004.

"Building the Energy Internet," Economist, Mar. 11, 2004.

Oil & Gas Journal lean energy management series, June 28, 2004, and Mar. 19, May 19, June 30, Aug. 25, and Nov. 24, 2003.

The authors

Roger N. Anderson is Doherty Senior Scholar at Lamont-Doherty Earth Observatory, Columbia University, Palisades, NY. He is also director of the Energy and the Environmental Research Center (EERC). His interests include marine geology, 4D seismic, borehole geophysics, portfolio management, real options, and lean management.

Albert Boulanger is senior computational scientist at the EERC at Lamont-Doherty. He has extensive experience in complex systems integration and expertise in providing intelligent reasoning components that interact with humans in large-scale systems. He integrates numerical, intelligent reasoning, human interface, and visualization components into seamless human-oriented systems.